Diffusion-Based Voice Conversion With Fast Maximum Likelihood Sampling Scheme

Under review as a conference paper at ICLR 2022, pdf (code will be made publicly available shortly)

Abstract

Voice conversion is a common speech synthesis task which can be solved in different ways depending on a particular real-world scenario. The most challenging one often referred to as one-shot many-to-many voice conversion consists in copying target voice from only one reference utterance in the most general case when both source and target speakers do not belong to the training dataset. We present a scalable high-quality solution based on diffusion probabilistic modeling and demonstrate its superior quality compared to state-of-the-art one-shot voice conversion approaches. Moreover, focusing on real-time applications, we investigate general principles which can make diffusion models faster while keeping synthesis quality at a high level. As a result, we develop a novel Stochastic Differential Equations solver suitable for various diffusion model types and generative tasks as shown through empirical studies and justify it by theoretical analysis.

1 New Maximum Likelihood SDE Solver

| Euler-Maruyama | Probability Flow | Maximum Likelihood (τ = 0.5) | Maximum Likelihood (τ = 1.0) |

|

|

|

|

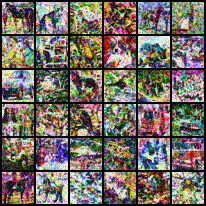

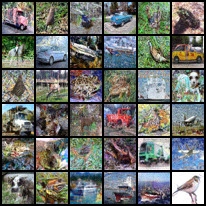

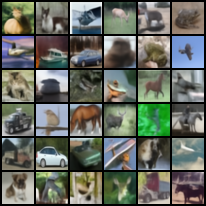

Figure 1. CIFAR10 images randomly sampled from the same DPM using only 10 iterations of reverse diffusion with different solvers. For Maximum Likelihood Sampling τ denotes the step when our new scheme was turned on (standard Euler-Maruyama solver is used when t > τ).

Standard Euler-Maruyama method usually used to solve reverse diffusion SDE in DPMs introduces large discretization error when the number of iterations is extremely small and, generally speaking, is biased. As a consequence DPM output distribution is not the same as data distribution even when score function is trained till optimality. Instead, we propose to use a novel solver that is optimal in terms of likelihood of forward diffusion trajectories. Figure 1 shows that it significantly outperforms standard DPM sampling techniques when the number of solver steps is small.

Our Fast Maximum Likelihood Solver does not require:

- Additional computational costs

- Exhaustive noise schedule search for small number of iterations

- Re-training of the DPM

Check out a quick guide (Google Colab, in progress) on CIFAR10 how to add our solver to your DPM.

2 One-shot Many-to-Many (Any-to-Any) Voice Conversion

In this section we provide a subset of audio samples used in Mean Opinion Score evaluation. We compare baselines with our diffusion-based models trained on VCTK and LibriTTS datasets (named as Diff-VCTK and Diff-LibriTTS respectively) as well as show the quality achieved using different sampling schemes (denoted as solver-N, where N is the number of iterations, EM is used for Euler-Maruyama solver, PF for Probability Flow, ML for our Maximum Likelihood solver).

| Source | Target | ||

| AGAIN-VC | FragmentVC | VQMIVC | |

| Baselines: | |||

| BNE-PPG-VC | |||

| ML-6 | ML-30 | ||

| Diff-VCTK: | |||

| EM-6 | PF-6 | ML-6 | |

| Diff-LibriTTS: | |||

| ML-30 | |||

September 2021